Why Retweets Are Being Flagged and Notices Are Increasing

Many users, including myself, have had content on X flagged or have received notices, even for retweets rather than original posts. This moderation appears to target posts that X’s automated systems classify as "sensitive content." These systems are designed to detect potential violations of the platform's rules, such as:

Nudity or explicit material

Hate speech or harmful content

Violence or graphic content

However, the issue arises when users, like myself, are flagged for simply retweeting such content without any additional commentary or intent to violate policies. This has caused confusion and frustration among users, especially when:

There is no clear appeal process for flagged retweets.

The flagged content is relatively minor or contextually misunderstood.

Users feel that content moderation is unevenly applied, often targeting certain topics or communities more than others.

The Problem of Inconsistent Enforcement

While I’ve noticed increasing flags and notices on my account, much of the flagged content seems trivial or wrongly flagged rather than genuinely harmful or rule-breaking. For instance:

Some flagged posts merely highlight ongoing events or controversial topics.

Others may reflect political, cultural, or personal opinions that shouldn’t inherently fall under "sensitive content."

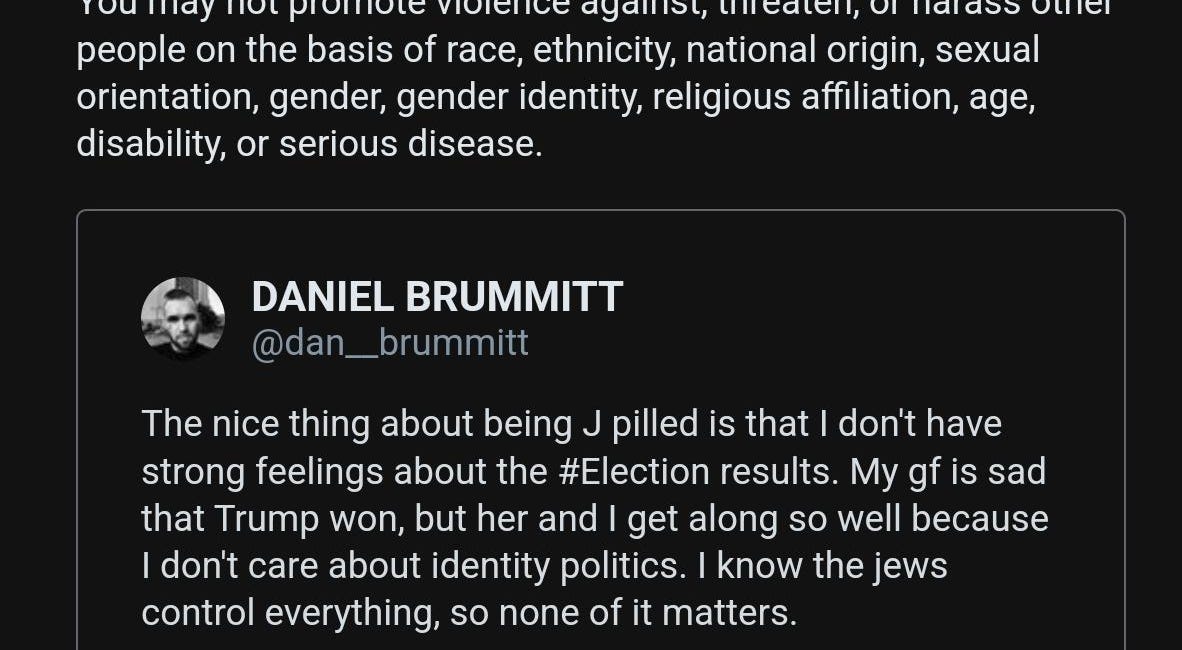

A recurring sentiment among users is that certain groups, like Jewish individuals or organizations, are overly protected on the platform, while issues such as racism, sexism, or offensive posts from high-profile users (including Elon Musk) are often left unmoderated. This creates an impression of bias or selective enforcement in X’s content moderation practices.

How to Proactively Address This Issue

To navigate these challenges and take control of your content, it’s important to adopt tools and strategies that can help manage your digital presence more effectively. Here are some recommendations:

Use Tools Like MegaBlock

A platform like MegaBlock can be invaluable for cleaning up your retweets, flagged content, and tweets containing certain keywords.

This tool lets you efficiently "nuke" problematic tweets, which can help keep your account compliant with platform rules.

Backup Your Tweets

Before making major changes to your account, consider creating a backup of your tweets.

X allows you to request your account data under Settings > Your Account > Download an Archive of Your Data.

Alternatively, screenshot tweets for records or save them using third-party tools to preserve context.
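Once you have the archive, you can review it yourself before deleting anything. The sketch below is a rough illustration, not an official tool. It assumes the archive contains a `tweets.js` file that is a JavaScript assignment wrapping a JSON array, that each entry holds a `tweet` object with `id_str` and `full_text` fields, and that retweets begin with "RT @". Check these assumptions against your own archive, since the layout may differ. The function names and the keyword list are hypothetical.

```python
import json


def load_archive_tweets(path):
    """Load tweets from an archive file (layout is an assumption)."""
    with open(path, encoding="utf-8") as f:
        raw = f.read()
    # Assumption: the file looks like `window.YTD.tweets.part0 = [ ... ]`,
    # so everything from the first "[" onward is a JSON array.
    start = raw.index("[")
    return json.loads(raw[start:])


def find_candidates(entries, keywords):
    """Return retweets and tweets that mention any of the given keywords."""
    results = []
    for entry in entries:
        # Assumption: each entry wraps the tweet in a "tweet" key.
        tweet = entry.get("tweet", entry)
        text = tweet.get("full_text", "")
        # Assumption: retweets are stored with an "RT @" prefix.
        is_retweet = text.startswith("RT @")
        matched = [k for k in keywords if k.lower() in text.lower()]
        if is_retweet or matched:
            results.append({
                "id": tweet.get("id_str"),
                "is_retweet": is_retweet,
                "keywords": matched,
                "text": text,
            })
    return results


# Small in-memory sample so the sketch runs without a real archive.
sample = [
    {"tweet": {"id_str": "1", "full_text": "RT @someone: breaking news"}},
    {"tweet": {"id_str": "2", "full_text": "My own post about graphic footage"}},
    {"tweet": {"id_str": "3", "full_text": "Good morning"}},
]

for hit in find_candidates(sample, ["graphic"]):
    print(hit["id"], hit["is_retweet"], hit["keywords"])
```

With a real archive, you would call `load_archive_tweets` on the path to your own `tweets.js` and pass the result to `find_candidates`. The script only lists candidates; it does not delete anything, so your backup stays intact while you decide what to remove.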

Be Proactive with Retweets

Carefully review content before retweeting to ensure it aligns with X’s guidelines.

When in doubt, use quote tweets instead, adding context or disclaimers to clarify your intent.

Enable Sensitive Content Settings

Go to Settings & Privacy > Privacy and Safety > Content You See and toggle on "Display media that may contain sensitive content."

With this setting on, media that X has marked as sensitive is shown rather than hidden, so you can see what a post actually contains before engaging with or retweeting it.

Final Thoughts: The Path Forward for X

Elon Musk’s ambitious vision for X as a haven for free speech and innovation is being undermined, not by users, but by inconsistent moderation policies and his current handlers. Many users, myself included, are frustrated by what feels like an uneven enforcement system. Retweets are being flagged as "sensitive content" (a complaint also raised in Reddit discussions), often for minor or misunderstood reasons, while overt racism, sexism, and harmful content remain widespread, even in posts from Musk himself. Some users also feel that content touching on Jewish topics is flagged or removed far faster than other material, and that perception of favoritism is dividing the platform.

The irony is that platforms like X succeed because of their users. Alienating them with unfair enforcement, or suppressing speech in ways that feel arbitrary or biased, risks driving them away. Users are already turning to tools like MegaBlock to clean up their accounts and protect their digital presence, and some are leaving the platform altogether. And as competing platforms from Meta and other AI-powered services become more attractive, the perception of overreach at X will only speed up this exodus.

At the same time, the rise of tools like ChatGPT has shown how technology can evolve while respecting users' needs. Unlike X’s increasingly restrictive policies, AI tools are improving accessibility and promoting fairness. If X continues down this path, it may find itself in the same position as Meta—bleeding users to platforms that offer transparency, fairness, and consistency.

Elon, this is a moment of reckoning. The frustration isn’t just coming from one group; it’s across the board. You have the potential to steer X toward true fairness, but your current approach is alienating the very people who make the platform thrive. Biased enforcement and favoritism will only deepen resentment and accelerate a user exodus. X needs fairness, accountability, and a renewed commitment to free expression—or it risks losing its relevance altogether. The time to act is now.
