The Impact of Data Suppression on Constructive Dialogue and Social Trust

In today’s age of boundless information and instant communication, platforms like Twitter play a crucial role in shaping public discourse. Yet, as many users have experienced, the way content is moderated often raises questions about fairness and the unintended consequences of over-policing speech. Recently, one of my tweets, aimed at promoting understanding and peace amidst divisive political tensions, was flagged for violating community guidelines. This experience has highlighted broader issues surrounding content suppression, and I believe it’s worth exploring the implications of such actions.

The Context of My Tweet

My original tweet was written in a spirit of unity. I wanted to convey that political discord often creates divides, and that personal relationships built on mutual respect and understanding are far more valuable than the endless cycle of political identity battles. While the language I used referenced broader societal critiques, the tone was one of acceptance and detachment from divisiveness, not hate or harm.

Despite this intent, my tweet was flagged for promoting "hateful" content. The decision felt particularly ironic given the sheer volume of genuinely harmful posts that often go unaddressed on the platform: threats of violence, overt hate speech, and dehumanizing rhetoric. How can a message promoting peace and understanding be flagged while more extreme content continues to thrive?

The Double Standard in Content Moderation

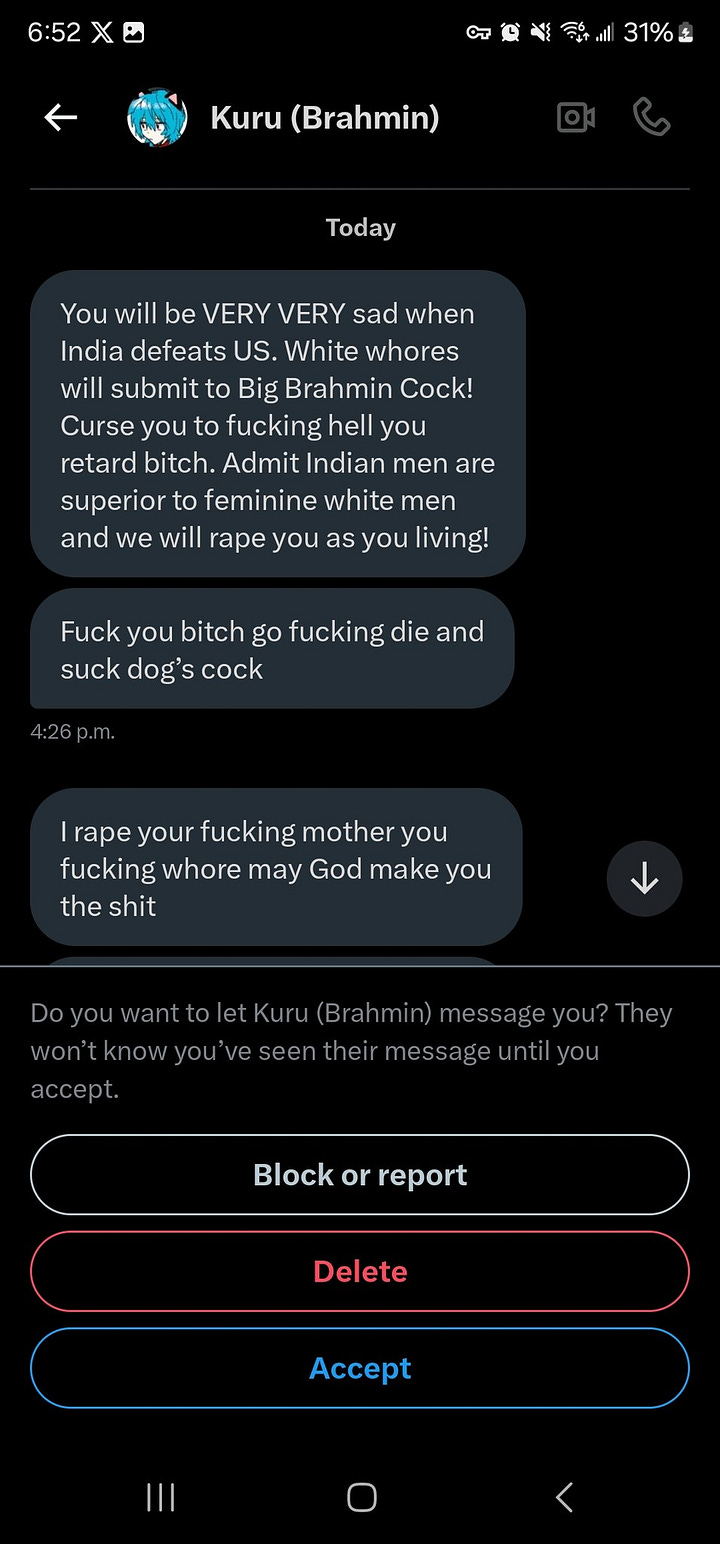

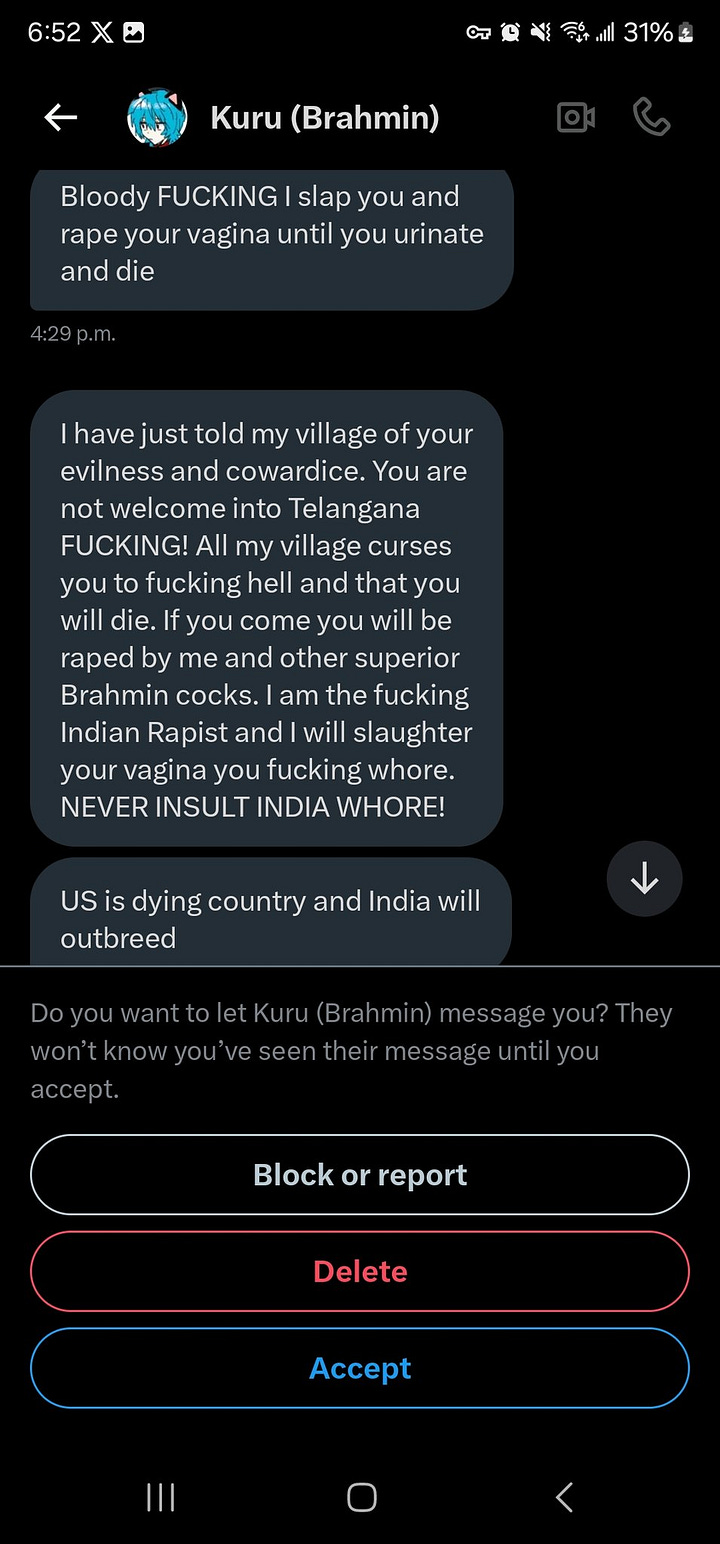

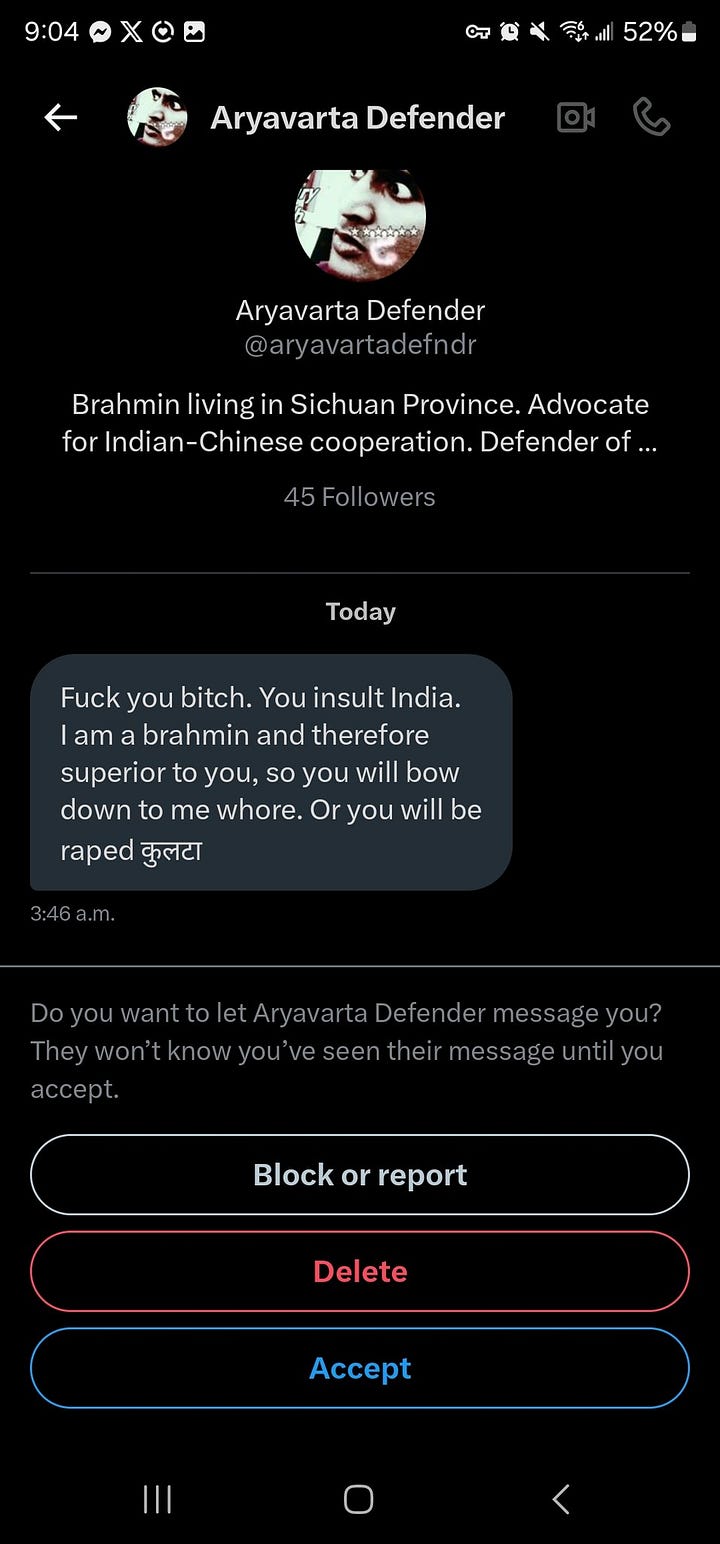

Content moderation systems, both automated and human, are deeply flawed. They frequently fail to read nuanced conversations in context, flagging good-faith efforts to discuss sensitive topics while allowing explicit threats and harassment to persist. For example:

Women regularly face threats of rape or violence, with little recourse from the platform.

Discussions about real-world injustices, even when approached constructively, are flagged as guideline violations.

This inconsistency creates a chilling effect on meaningful dialogue. When users fear being flagged or banned for expressing nuanced opinions, they are less likely to engage in discussions that challenge the status quo or foster understanding. Over time, this suppression not only stifles free expression but also erodes trust in the platform's ability to support healthy conversations.

"Revealing Instagram's Censorship Controversy"

"Instagram Users Accuse the Platform of Censoring Posts Supporting Palestine, and I Wholeheartedly Believe Them."

How Suppression Fuels Resentment

Ironically, the suppression of discourse often exacerbates the very divides it seeks to address. Flagging tweets or banning accounts for engaging in nuanced critiques doesn’t erase the underlying issues—it pushes them underground, where frustration festers. When people feel silenced, they’re more likely to gravitate toward echo chambers where grievances go unchecked and extremist views can flourish.

This dynamic is visible in the rise of antisemitism and other forms of prejudice in recent years. Platforms crack down on individuals using inflammatory language, but moderation alone does nothing to address the root causes of such sentiments: systemic inequality, misinformation, and a lack of open dialogue. Suppressing discussions about these issues, even when they are framed constructively, only deepens mistrust and fuels resentment.

The Role of Platforms in Supporting Constructive Dialogue

Social media platforms must recognize their responsibility in fostering open, honest, and constructive discussions. Suppressing nuanced perspectives under the guise of "community guidelines" is counterproductive. Instead, platforms should:

Invest in Contextual Moderation: Content moderation should focus on understanding the intent and context of posts, rather than relying solely on keyword triggers or blanket bans.

Prioritize Genuine Harm Reduction: Efforts should target content that explicitly promotes violence or harassment, rather than silencing discussions that may touch on sensitive topics.

Encourage Dialogue, Not Censorship: Platforms must create spaces where users feel safe expressing their thoughts without fear of unjust suppression.

A Call for Balance

We live in an era where open discussion is more important than ever. Political divides, racial tensions, and societal challenges won’t be solved by silencing those trying to engage constructively. If anything, suppressing such voices only widens the gap between individuals and communities, making unity harder to achieve.

My flagged tweet was a small example of a larger issue, but it underscores an important point: platforms must strike a better balance between moderating harmful content and allowing honest, good-faith discussions. Without this balance, we risk losing one of the most powerful tools for change—our ability to communicate and connect.

It's time for platforms to reevaluate their approach to content moderation. By focusing on intent, weighing context, and addressing the real sources of harm, they can create an environment where users feel empowered to share their voices without fear of suppression. Only then can we begin to heal the divides that threaten to tear us apart.

"Challenging the Status Quo"

If I learned anything from BLM, it's that Israel is gonna get a ton of money after this.

#WeAreAllAssange 🍀 📰 ⏰ #Censorship #Bias #SocialMedia #PressFreedoms

The BBC hadn’t prepared for this moment and it was beautiful. pic.twitter.com/TY859XCSzV